https://tutorials.pytorch.kr/beginner/blitz/cifar10_tutorial.html

Reference tutorial: Training a Classifier (tutorials.pytorch.kr)

1. Normalizing the Data in CIFAR10Dataset

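The images are normalized around 0.5 (Normalize): `ToTensor()` scales pixel values to [0, 1], and `Normalize` with mean 0.5 and standard deviation 0.5 for each channel computes (x - 0.5) / 0.5, which maps them to [-1, 1]. This rescales the value range; it does not make the pixels follow a normal distribution.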
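A quick numeric check of what `Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5))` does to each channel value after `ToTensor()`, and how the `img / 2 + 0.5` line in the `imshow` helper undoes it. This is a plain-Python sketch of the per-value formula, not the torchvision implementation; the function names are illustrative.

```python
def normalize(x, mean=0.5, std=0.5):
    """Per-channel formula applied by transforms.Normalize: (x - mean) / std."""
    return (x - mean) / std


def unnormalize(y):
    """Inverse used in imshow(): y / 2 + 0.5 equals y * std + mean for mean = std = 0.5."""
    return y / 2 + 0.5


# ToTensor() has already scaled 8-bit pixels from [0, 255] to [0.0, 1.0]
pixels = [0.0, 0.25, 0.5, 1.0]
normalized = [normalize(p) for p in pixels]
restored = [unnormalize(n) for n in normalized]

print(normalized)  # [-1.0, -0.5, 0.0, 1.0]
print(restored)    # [0.0, 0.25, 0.5, 1.0]
```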

```python
import torch
import torchvision
import torchvision.transforms as transforms
import matplotlib.pyplot as plt
import numpy as np


class HyperParameters:
    def __init__(self, batch_size=4):
        self.batch_size_ = batch_size  # mini-batch size


class CIFAR10Dataset:
    def __init__(self, hp: HyperParameters):
        self.hp = hp
        self.classes = ('plane', 'car', 'bird', 'cat',
                        'deer', 'dog', 'frog', 'horse', 'ship', 'truck')  # classes to predict
        # ToTensor(): [0, 255] -> [0.0, 1.0]; Normalize: (x - 0.5) / 0.5 -> [-1.0, 1.0]
        self.transform = transforms.Compose(
            [transforms.ToTensor(),
             transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5))])
        self.data_path = './data'
        # Initialized here so createDataLoaders() can detect a missing loadDatasets() call
        self.train_set = None
        self.test_set = None

    def loadDatasets(self):
        self.train_set = torchvision.datasets.CIFAR10(
            root=self.data_path,
            train=True,
            download=True,
            transform=self.transform
        )
        self.test_set = torchvision.datasets.CIFAR10(
            root=self.data_path,
            train=False,
            download=True,
            transform=self.transform
        )
        return self

    def createDataLoaders(self):
        if self.train_set is None or self.test_set is None:
            raise ValueError("Datasets are not loaded. Call loadDatasets() first.")
        self.train_loader = torch.utils.data.DataLoader(
            dataset=self.train_set,
            batch_size=self.hp.batch_size_,
            shuffle=True,
            num_workers=2
        )
        self.test_loader = torch.utils.data.DataLoader(
            dataset=self.test_set,
            batch_size=self.hp.batch_size_,
            shuffle=False,
            num_workers=2
        )
        return self

    def getLoaders(self):
        return self.train_loader, self.test_loader


# Helper function to display an image
def imshow(img):
    img = img / 2 + 0.5  # unnormalize: inverse of (x - 0.5) / 0.5
    npimg = img.numpy()
    plt.imshow(np.transpose(npimg, (1, 2, 0)))  # (C, H, W) -> (H, W, C) for matplotlib
    plt.show()


if __name__ == "__main__":
    hp = HyperParameters()
    dataloader = CIFAR10Dataset(hp)
    trainloader, testloader = dataloader.loadDatasets().createDataLoaders().getLoaders()

    # Get a random batch of training images
    dataiter = iter(trainloader)
    images, labels = next(dataiter)

    # Show the images
    imshow(torchvision.utils.make_grid(images))

    # Print the ground-truth labels
    # (classes lives on the CIFAR10Dataset object, not on HyperParameters)
    print(' '.join(f'{dataloader.classes[labels[j]]:5s}' for j in range(hp.batch_size_)))
```

Output (a random batch, so the classes differ between runs):

bird frog deer plane
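The printed names come from indexing the `classes` tuple with the integer labels of the batch. A plain-Python sketch with a hard-coded label list (chosen here to reproduce the output above; a real batch is random because `shuffle=True`):

```python
classes = ('plane', 'car', 'bird', 'cat',
           'deer', 'dog', 'frog', 'horse', 'ship', 'truck')

# Hypothetical label batch; CIFAR10 labels are integers 0-9 that index `classes`
labels = [2, 6, 4, 0]

# :5s left-aligns each name in a 5-character field, as in the code above
line = ' '.join(f'{classes[j]:5s}' for j in labels)
print(line)  # bird  frog  deer  plane
```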

2. Defining a Convolutional Neural Network (CNN)

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class ConvNet(nn.Module):
    def __init__(self):
        super().__init__()
        self.conv1 = nn.Conv2d(3, 6, 5)     # 3 input channels (RGB), 6 filters, 5x5 kernel
        self.pool = nn.MaxPool2d(2, 2)      # 2x2 max pooling with stride 2
        self.conv2 = nn.Conv2d(6, 16, 5)
        self.fc1 = nn.Linear(16 * 5 * 5, 120)
        self.fc2 = nn.Linear(120, 84)
        self.fc3 = nn.Linear(84, 10)        # one output score per CIFAR10 class

    def forward(self, x):
        x = self.pool(F.relu(self.conv1(x)))
        x = self.pool(F.relu(self.conv2(x)))
        x = torch.flatten(x, 1)  # flatten all dimensions except the batch dimension
        x = F.relu(self.fc1(x))
        x = F.relu(self.fc2(x))
        x = self.fc3(x)
        return x


if __name__ == "__main__":
    hp = HyperParameters()
    dataloader = CIFAR10Dataset(hp)
    trainloader, testloader = dataloader.loadDatasets().createDataLoaders().getLoaders()
    net = ConvNet()
```
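The `16 * 5 * 5` input size of `fc1` follows from how each layer shrinks a 32x32 CIFAR10 image. With no padding and stride 1, a `Conv2d` with kernel size k maps a side length n to n - k + 1, and `MaxPool2d(2, 2)` halves it (rounding down). A small sketch of that bookkeeping; `conv_out` is an illustrative helper, not a PyTorch function:

```python
def conv_out(size, kernel, stride=1, padding=0):
    """Output side length of a conv/pool layer (dilation 1, floor rounding)."""
    return (size + 2 * padding - kernel) // stride + 1


side = 32                         # CIFAR10 images are 3 x 32 x 32
side = conv_out(side, 5)          # conv1, 5x5 kernel  -> 28
side = conv_out(side, 2, 2)       # pool, 2x2 stride 2 -> 14
side = conv_out(side, 5)          # conv2, 5x5 kernel  -> 10
side = conv_out(side, 2, 2)       # pool               -> 5

flat_features = 16 * side * side  # conv2 produces 16 channels
print(side, flat_features)        # 5 400
```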

3. Defining a Loss Function and Optimizer

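For this classification model we use cross-entropy loss and SGD with momentum.

I therefore extended the HyperParameters class to specify them. The optimizer needs the network's parameters (`net.parameters()`), so it is created in the `learning` class instead.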
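For reference, `optim.SGD` with `momentum` (and the defaults `dampening=0`, `nesterov=False`) keeps a velocity buffer b per parameter: b <- momentum * b + grad, then p <- p - lr * b, with b initialized to the first gradient. A plain-Python sketch of that rule on the toy function f(p) = p**2, whose gradient is 2p; the toy objective, the starting point and the larger learning rate are assumptions chosen so the effect is visible:

```python
def sgd_momentum(p, lr=0.001, momentum=0.9, steps=1):
    """Minimize f(p) = p**2 with the SGD-with-momentum update rule of torch.optim.SGD."""
    buf = None
    history = [p]
    for _ in range(steps):
        grad = 2 * p                                       # df/dp for f(p) = p**2
        buf = grad if buf is None else momentum * buf + grad
        p = p - lr * buf
        history.append(p)
    return history


hist = sgd_momentum(1.0, lr=0.1, momentum=0.9, steps=2)
print(hist)  # approximately [1.0, 0.8, 0.46]
```

In the second step the buffer carries 0.9 of the first gradient forward, so the parameter moves further (0.34) than plain SGD would (0.16).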

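```python
import torch.nn as nn


class HyperParameters:
    def __init__(self, batch_size=4, lr=0.001, momentum=0.9):
        self.batch_size_ = batch_size
        self.loss_func = nn.CrossEntropyLoss()  # loss for multi-class classification
        self.lr_ = lr                           # SGD learning rate
        self.momentum_ = momentum               # SGD momentum
```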
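`nn.CrossEntropyLoss` takes raw scores (logits) and integer class labels and computes -log(softmax(logits)[label]), averaged over the batch. A plain-Python sketch of the single-sample formula (it shows the math, not PyTorch's implementation). It also gives a useful sanity check: when a freshly initialized 10-class network outputs nearly equal logits, the loss is close to ln(10), about 2.303.

```python
import math


def cross_entropy(logits, target):
    """-log(softmax(logits)[target]) for one sample, using the log-sum-exp trick."""
    m = max(logits)                                    # subtract the max for numerical stability
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[target]


uniform = [0.0] * 10                                   # 10 CIFAR10 classes, equal scores
print(round(cross_entropy(uniform, 3), 4))             # 2.3026 (= ln 10)

confident = [0.0] * 10
confident[3] = 10.0                                    # large score on the correct class
print(cross_entropy(confident, 3) < 0.01)              # True
```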

4. Training the Network

```python
import torch.optim as optim


class learning:
    def __init__(self, net, hp: HyperParameters):
        self.net = net
        self.criterion = hp.loss_func
        # Created here because the optimizer needs the network's parameters
        self.optimizer = optim.SGD(net.parameters(), lr=hp.lr_, momentum=hp.momentum_)

    def train(self, trainloader, num_epochs=2):  # 2 epochs by default
        for epoch in range(num_epochs):
            running_loss = 0.0
            for i, data in enumerate(trainloader, 0):
                inputs, labels = data

                # Zero the parameter gradients
                self.optimizer.zero_grad()

                # Forward + backward + optimize
                outputs = self.net(inputs)
                loss = self.criterion(outputs, labels)
                loss.backward()
                self.optimizer.step()

                # Print statistics: average loss over every 2000 mini-batches
                running_loss += loss.item()
                if i % 2000 == 1999:
                    print(f'[{epoch + 1}, {i + 1:5d}] loss: {running_loss / 2000:.3f}')
                    running_loss = 0.0


if __name__ == "__main__":
    hp = HyperParameters()
    dataloader = CIFAR10Dataset(hp)
    trainloader, testloader = dataloader.loadDatasets().createDataLoaders().getLoaders()
    net = ConvNet()

    # 4. Training
    trainer = learning(net, hp)
    trainer.train(trainloader)
```

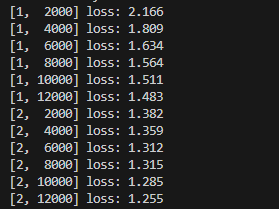

The network was trained by looping over the training set twice (2 epochs).
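The `i % 2000 == 1999` check prints the average of the last 2000 mini-batch losses. With the 50,000 CIFAR10 training images and `batch_size=4`, one epoch is 12,500 mini-batches, so the loss is printed six times per epoch. A small arithmetic sketch:

```python
import math

train_images = 50_000   # size of the CIFAR10 training split
batch_size = 4

# DataLoader keeps a final partial batch by default (drop_last=False), hence ceil
batches_per_epoch = math.ceil(train_images / batch_size)
log_steps = [i + 1 for i in range(batches_per_epoch) if i % 2000 == 1999]

print(batches_per_epoch)  # 12500
print(log_steps)          # [2000, 4000, 6000, 8000, 10000, 12000]
```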

4-1. Saving the Trained Model

```python
PATH = './cifar_net.pth'
torch.save(net.state_dict(), PATH)  # save only the learned parameters (state_dict)
```

5. Testing the Network on the Test Data

We check the network by comparing the class it predicts with the ground truth.

If a prediction is correct, the sample is counted as a correct prediction.
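`torch.max(outputs, 1)` returns two tensors along dimension 1: the largest score in each row and its index. The index is the predicted class, and comparing it with the labels gives the number of correct predictions. A minimal sketch with made-up logits for a batch of 3 (the values are illustrative, not model output):

```python
import torch

# Hypothetical logits: 3 samples x 4 classes
outputs = torch.tensor([[0.1, 2.0, -1.0, 0.3],
                        [1.5, 0.2, 0.1, 0.0],
                        [0.0, 0.1, 0.2, 3.0]])
labels = torch.tensor([1, 2, 3])

values, predicted = torch.max(outputs, 1)   # max score and its class index per row
correct = (predicted == labels).sum().item()

print(predicted.tolist())  # [1, 0, 3]
print(correct)             # 2
```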

```python
if __name__ == "__main__":
    hp = HyperParameters()
    dataloader = CIFAR10Dataset(hp)
    trainloader, testloader = dataloader.loadDatasets().createDataLoaders().getLoaders()
    net = ConvNet()

    # 4. Training (skipped; the saved weights are loaded instead)
    # trainer = learning(net, hp)
    # trainer.train(trainloader)

    # Save and load
    PATH = './saved_models/cifar_net.pth'
    # torch.save(net.state_dict(), PATH)
    net.load_state_dict(torch.load(PATH))
    # net.load_state_dict(torch.load(PATH, weights_only=True))

    dataiter = iter(testloader)
    images, labels = next(dataiter)

    outputs = net(images)
    _, predicted = torch.max(outputs, 1)  # index of the highest score = predicted class
    # classes lives on the CIFAR10Dataset object, not on HyperParameters
    print('Predicted: ', ' '.join(f'{dataloader.classes[predicted[j]]:5s}'
                                  for j in range(4)))
```
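The commented-out `torch.load(PATH, weights_only=True)` variant restricts unpickling to tensors and primitive containers, which is the safer choice for a `state_dict`. If the weights were saved from a GPU run, `map_location` lets them load on a CPU-only machine. A self-contained round-trip sketch; the tiny `nn.Linear` model and the temporary file path are stand-ins (assumptions) for `ConvNet` and `./saved_models/cifar_net.pth`:

```python
import os
import tempfile

import torch
import torch.nn as nn

model = nn.Linear(4, 2)                        # stand-in for ConvNet

with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, 'model.pth')      # stand-in for PATH
    torch.save(model.state_dict(), path)       # save only the parameters

    restored = nn.Linear(4, 2)                 # the architecture must be rebuilt first
    state = torch.load(path, map_location='cpu', weights_only=True)
    restored.load_state_dict(state)

same = all(torch.equal(a, b) for a, b in zip(model.state_dict().values(),
                                             restored.state_dict().values()))
print(same)  # True
```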

if __name__ == "__main__":

gpu_manager = GPUManager()

device = gpu_manager.setDevice()

hp = HyperParameters()

dataloader = CIFAR10Dataset(hp)

trainloader, testloader = dataloader.loadDatasets().createDataLoaders().getLoaders()

net = ConvNet()

net.to(device)

# 4. 학습

# trainer = learning(net, hp)

# trainer.train(trainloader)

# 저장 및 로드

PATH = './saved_models/cifar_net.pth'

# torch.save(net.state_dict(), PATH)

net.load_state_dict(torch.load(PATH, weights_only=True))

dataiter = iter(testloader)

images, labels = next(dataiter)

# 이미지를 출력합니다.

imshow(torchvision.utils.make_grid(images))

outputs = net(images)

_, predicted = torch.max(outputs, 1)

print('Predicted: ', ' '.join(f'{dataloader.classes[predicted[j]]:5s}'

for j in range(4)))

correct = 0

total = 0

# 학습 중이 아니므로, 출력에 대한 변화도를 계산할 필요가 없습니다

with torch.no_grad():

for data in testloader:

images, labels = data

# 신경망에 이미지를 통과시켜 출력을 계산합니다

outputs = net(images)

# 가장 높은 값(energy)를 갖는 분류(class)를 정답으로 선택하겠습니다

_, predicted = torch.max(outputs.data, 1)

total += labels.size(0)

correct += (predicted == labels).sum().item()

print(f'Accuracy of the network on the 10000 test images: {100 * correct // total} %')

# 각 분류(class)에 대한 예측값 계산을 위해 준비

correct_pred = {classname: 0 for classname in dataloader.classes}

total_pred = {classname: 0 for classname in dataloader.classes}

# 변화도는 여전히 필요하지 않습니다

with torch.no_grad():

for data in testloader:

images, labels = data

outputs = net(images)

_, predictions = torch.max(outputs, 1)

# 각 분류별로 올바른 예측 수를 모읍니다

for label, prediction in zip(labels, predictions):

if label == prediction:

correct_pred[dataloader.classes[label]] += 1

total_pred[dataloader.classes[label]] += 1

# 각 분류별 정확도(accuracy)를 출력합니다

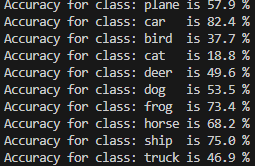

for classname, correct_count in correct_pred.items():

accuracy = 100 * float(correct_count) / total_pred[classname]

print(f'Accuracy for class: {classname:5s} is {accuracy:.1f} %')
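The per-class loop above compares samples one at a time in Python. The same counts can also be obtained with tensor operations using `torch.bincount`; this is a swapped-in vectorized alternative, sketched on made-up labels and predictions (illustrative values only):

```python
import torch

num_classes = 3
labels = torch.tensor([0, 0, 1, 1, 1, 2])        # hypothetical ground truth
predictions = torch.tensor([0, 1, 1, 1, 0, 2])   # hypothetical model output

# Samples per class, and correctly predicted samples per class
total_per_class = torch.bincount(labels, minlength=num_classes)
correct_per_class = torch.bincount(labels[predictions == labels], minlength=num_classes)

accuracy = 100 * correct_per_class.float() / total_per_class.float()
print(total_per_class.tolist())                  # [2, 3, 1]
print(correct_per_class.tolist())                # [1, 2, 1]
print([round(a, 1) for a in accuracy.tolist()])  # [50.0, 66.7, 100.0]
```

For the full test set you would collect `labels` and `predictions` from every batch (for example with `torch.cat`) and use `num_classes=10`.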

6. Training on the GPU and Modularizing the Code

GPU utilization and memory usage can be monitored with `nvidia-smi` while the code runs.

```python
# Assumes the imports, HyperParameters, CIFAR10Dataset and ConvNet from the sections above


class ModelEvaluator:
    def __init__(self, net, dataloader, device):
        self.net = net
        self.dataloader = dataloader  # the CIFAR10Dataset object (provides .classes)
        self.device = device

    @staticmethod
    def imshow(img):
        img = img / 2 + 0.5  # unnormalize
        npimg = img.numpy()
        plt.imshow(np.transpose(npimg, (1, 2, 0)))
        plt.show()

    def show_predictions(self, images):
        self.net.eval()
        with torch.no_grad():
            # Move the images to the GPU and run the prediction
            outputs = self.net(images.to(self.device))
            _, predicted = torch.max(outputs, 1)

        # Move back to the CPU for display
        predicted = predicted.cpu()
        self.imshow(torchvision.utils.make_grid(images.cpu()))
        print('Predicted: ', ' '.join(f'{self.dataloader.classes[predicted[j]]:5s}'
                                      for j in range(len(predicted))))

    # Overall accuracy of the model
    def evaluate(self, testloader):
        correct = 0
        total = 0
        self.net.eval()  # evaluation mode
        with torch.no_grad():  # not training, so no gradient computation
            for data in testloader:
                images, labels = data[0].to(self.device), data[1].to(self.device)
                outputs = self.net(images)
                _, predicted = torch.max(outputs, 1)
                total += labels.size(0)
                correct += (predicted == labels).sum().item()
        return 100 * correct / total

    # Accuracy for each class
    def evaluate_by_class(self, testloader):
        correct_pred = {classname: 0 for classname in self.dataloader.classes}
        total_pred = {classname: 0 for classname in self.dataloader.classes}
        self.net.eval()  # evaluation mode
        with torch.no_grad():
            for data in testloader:
                images, labels = data[0].to(self.device), data[1].to(self.device)
                outputs = self.net(images)
                _, predictions = torch.max(outputs, 1)
                for label, prediction in zip(labels, predictions):
                    if label == prediction:
                        correct_pred[self.dataloader.classes[label]] += 1
                    total_pred[self.dataloader.classes[label]] += 1

        results = {}
        for classname, correct_count in correct_pred.items():
            accuracy = 100 * float(correct_count) / total_pred[classname]
            print(f'Accuracy for class: {classname:5s} is {accuracy:.1f} %')
            results[classname] = accuracy
        return results


if __name__ == "__main__":
    device = torch.device('cuda:0' if torch.cuda.is_available() else 'cpu')
    hp = HyperParameters()
    dataloader = CIFAR10Dataset(hp)
    trainloader, testloader = dataloader.loadDatasets().createDataLoaders().getLoaders()
    net = ConvNet()
    net.to(device)  # move the model to the GPU

    # 4. Training (device-aware Learning class, not shown in this post)
    # trainer = Learning(net, hp)
    # trainer.train(device, trainloader)

    # 4.1 Set the path and save
    PATH = './saved_models/cifar_net.pth'
    # torch.save(net.state_dict(), PATH)

    # Load
    net.load_state_dict(torch.load(PATH, map_location=device, weights_only=True))

    # Prediction and evaluation
    dataiter = iter(testloader)
    images, labels = next(dataiter)
    evaluator = ModelEvaluator(net, dataloader, device)

    # Updated evaluation calls
    evaluator.show_predictions(images)
    overall_accuracy = evaluator.evaluate(testloader)
    print(f'Accuracy of the network on the 10000 test images: {overall_accuracy:.1f}%')
    class_accuracies = evaluator.evaluate_by_class(testloader)
```
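The commented-out `trainer.train(device, trainloader)` call assumes a device-aware version of the training loop that is not shown in this post. A minimal sketch of what one epoch of such a loop could look like; `train_one_epoch` is a hypothetical name, the `nn.Sequential` model stands in for `ConvNet`, and random tensors replace `trainloader` so the snippet runs without downloading CIFAR10:

```python
import torch
import torch.nn as nn
import torch.optim as optim


def train_one_epoch(net, loader, criterion, optimizer, device):
    """Hypothetical device-aware epoch: each batch is moved to the model's device."""
    net.train()
    running_loss = 0.0
    for inputs, labels in loader:
        inputs, labels = inputs.to(device), labels.to(device)
        optimizer.zero_grad()
        loss = criterion(net(inputs), labels)
        loss.backward()
        optimizer.step()
        running_loss += loss.item()
    return running_loss / len(loader)        # average loss per mini-batch


device = torch.device('cuda:0' if torch.cuda.is_available() else 'cpu')
net = nn.Sequential(nn.Flatten(), nn.Linear(3 * 32 * 32, 10)).to(device)  # stand-in for ConvNet
optimizer = optim.SGD(net.parameters(), lr=0.001, momentum=0.9)

# Two fake batches of 4 CIFAR-sized images with random labels
fake_loader = [(torch.randn(4, 3, 32, 32), torch.randint(0, 10, (4,))) for _ in range(2)]
avg_loss = train_one_epoch(net, fake_loader, nn.CrossEntropyLoss(), optimizer, device)
print(f'average loss: {avg_loss:.3f}')
```

The key design point is that the model is moved once with `.to(device)`, while every batch has to be moved inside the loop, because the DataLoader always yields CPU tensors.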

7. Improving Model Performance by Tuning Hyperparameters

batch_size : 4 -> 32

epoch : 2 -> 30

The test accuracy increased.

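```python
import torch.nn as nn


class HyperParameters:
    def __init__(self, batch_size=32, lr=0.001, momentum=0.9, num_epoch=30):
        self.batch_size_ = batch_size
        self.loss_func = nn.CrossEntropyLoss()
        self.lr_ = lr
        self.momentum_ = momentum
        # learning.train() defaults to 2 epochs, so pass this explicitly:
        # trainer.train(trainloader, num_epochs=hp.epoch_)
        self.epoch_ = num_epoch
```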
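Two consequences of the new settings can be checked with arithmetic. With `batch_size=32` an epoch has only 1,563 mini-batches, so the `i % 2000 == 1999` print in the `learning.train` loop never fires and that interval would have to be lowered to see progress. Also, 30 epochs at batch size 32 perform more optimizer steps in total than 2 epochs at batch size 4:

```python
import math

train_images = 50_000  # CIFAR10 training split


def steps_per_epoch(batch_size):
    """Mini-batches per epoch when the last partial batch is kept (drop_last=False)."""
    return math.ceil(train_images / batch_size)


old = steps_per_epoch(4)    # 12500
new = steps_per_epoch(32)   # 1563; the last batch holds 50000 - 1562 * 32 = 16 images

print(old * 2, new * 30)                          # 25000 46890
print(any(i % 2000 == 1999 for i in range(new)))  # False: no progress line is printed
```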
